LCEL - A unique GenAI programming language

Thanks to LCEL, it is now much easier to develop GenAI applications. It offers a powerful approach to designing processing chains, which minimizes programming effort and lets us focus on creating innovative solutions.

This article is also available in Vietnamese.

What is LCEL?

In today's digital age, the widespread adoption of Generative AI (GenAI) systems and applications has opened a new horizon in technology, marking a significant advancement in solving complex problems across various fields. However, despite the robust development and tremendous potential that GenAI offers, these systems and applications also face significant challenges, such as:

- Complex Design Flows: In complex systems, the use of different methods by each component creates significant barriers in connecting and interacting between components.

- Long Response Times: In GenAI-powered chat applications, waiting for responses often results in a less smooth, less "real" user experience.

- Poor Performance: In systems that need to query reference documents (like RAG) or fetch data from third-party APIs, parallel processing is crucial for performance but often challenging to implement.

- Difficulty in Using Custom Functions: Integrating custom logic processing functions into an existing framework is often difficult due to compatibility issues or complexity in integration.

- Debugging and Logging: During development, tracking intermediate processing information and handling specific events often pose significant challenges.

- Limited Customization: Customizability is a key value for platform-oriented systems. The need to customize and adapt to new requirements is essential. However, this often faces limitations due to a lack of flexibility in configuration and expansion.

In this context, the LangChain Expression Language (LCEL), a declarative framework, helps transform complex code segments into simple syntax, enabling rapid and flexible development of processing flows in GenAI applications. Overall, LCEL supports capabilities such as:

- Unified Interface: LCEL introduces the Runnable interface, a unified solution for calling methods for components, opening up the ability to design processing flows easily and flexibly. See more in Basic Usecase.

- Streaming: LCEL's streaming capability significantly reduces waiting times, providing a better experience. For applications requiring JSON return formats, LCEL also offers an auto-complete feature, optimizing Generative AI processing time. See more in Streaming.

- Parallel Processing: LCEL allows for the simple and intuitive construction of parallel task execution chains. This significantly enhances processing performance, especially in multi-step processing flows. See more in RAG - Retrieval-Augmented Generation.

- Custom Function Integration: With support for integrating custom functions through simple wrapper functions, LCEL opens up high customization opportunities, allowing developers to easily add specialized processing into the overall flow. See more in Branching and Merging.

- Debugging and Logging: LCEL provides powerful tools for logging and debugging through event recording, helping developers easily monitor and troubleshoot issues. See more in Debug chain.

- High Customizability: LCEL stands out with its high degree of customization, from customizing models and prompts to adding new customizations into the processing chain. This not only serves well for logic and testing purposes but also expands the system's application possibilities in specific scenarios. See more in Alternative configuration.

In this article, let's explore LCEL through various use cases, focusing on building chains, that is, processing flows.

Read more: LangChain: Powerful framework for Generative AI

Demo

Basic Usecase

We'll start with creating a basic chain:

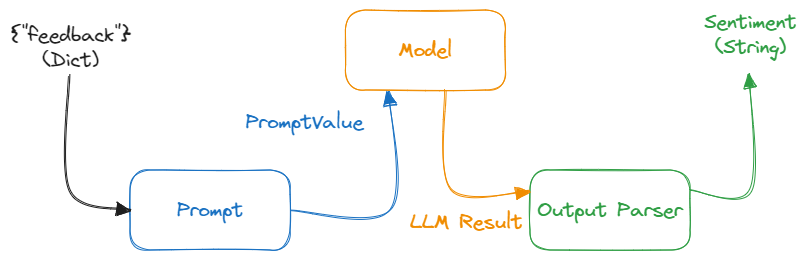

Basic usecase chain

In this chain, we create components:

import os

from langchain.prompts.prompt import PromptTemplate
from langchain_openai import AzureChatOpenAI
from langchain_core.output_parsers import StrOutputParser

# =========== Prompt
_template = """Given the feedback, analyze and detect the sentiment:
<feedback>
{feedback}
</feedback>
Only output sentiment: Negative, Neutral, Positive
Sentiment:"""
prompt = PromptTemplate.from_template(_template)

# =========== Model
os.environ["AZURE_OPENAI_API_KEY"] = ""
os.environ["AZURE_OPENAI_ENDPOINT"] = ""
os.environ["OPENAI_API_VERSION"] = ""
model_deployment_name = ""
model = AzureChatOpenAI(deployment_name=model_deployment_name)

# =========== Output Parser: We will use StrOutputParser to parse the output to String

Then, we create the chain and execute it:

# =========== Chain
sentiment_chain = prompt | model | StrOutputParser()

print(sentiment_chain.invoke({"feedback": "I found the service quite impressive, though the ambiance was a bit lacking."}))

This shows how to create components and execute the chain. A notable feature is the | operator, which links components into a sequence that runs step by step, known as a RunnableSequence.

Additionally, we can see that LCEL components such as Prompt, ChatModel, and OutputParser can "connect" with each other in the chain. This is thanks to the Runnable interface, which you can learn more about in the Interface documentation.
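To make the idea of the | operator more concrete, here is a minimal plain-Python sketch of how an operator can compose steps into a sequence. It is a toy model only, not LangChain's implementation: the `Step` class and the three stand-in steps are hypothetical names invented for this illustration, and the "model" returns a canned reply instead of calling an LLM.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a chain component (hypothetical, not a LangChain class)."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # `a | b` returns a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Stand-ins for the prompt, the model, and the output parser.
format_prompt = Step(lambda data: f"Feedback: {data['feedback']}\nSentiment:")
fake_model = Step(lambda prompt_text: {"content": " Positive "})  # canned reply
parse_text = Step(lambda message: message["content"].strip())

toy_chain = format_prompt | fake_model | parse_text
result = toy_chain.invoke({"feedback": "Great service"})
print(result)
```

Because every step exposes the same `invoke()` method, any step can follow any other, which is the property a unified interface provides.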

Streaming

In GenAI applications, LLM/ChatModel is often the bottleneck in the system. Therefore, streaming becomes necessary in applications that use LLM during interactions with users. When using LCEL, we can easily use the stream() / astream() function to obtain results in a streaming format. Components that do not support streaming will not affect the streaming capabilities of other components.

for chunk in sentiment_chain.stream(
    {"feedback": "I found the service quite impressive, though the ambiance was a bit lacking."}
):
    print(chunk, end="|", flush=True)
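The following plain-Python sketch illustrates why streamed output arrives in pieces: each step is a generator that handles a chunk as soon as the previous step produces it. It is a conceptual toy under stated assumptions, not how LangChain is implemented; `fake_model_stream` and `strip_whitespace` are hypothetical stand-ins, and the tokens are hard-coded.

```python
from typing import Iterable, Iterator


def fake_model_stream(prompt_text: str) -> Iterator[str]:
    """Hypothetical model stand-in that yields its reply piece by piece."""
    for token in [" Neu", "tral "]:
        yield token


def strip_whitespace(chunks: Iterable[str]) -> Iterator[str]:
    """Downstream step that transforms each chunk as soon as it arrives."""
    for chunk in chunks:
        yield chunk.strip()


received = []
for chunk in strip_whitespace(fake_model_stream("any prompt")):
    received.append(chunk)
    print(chunk, end="|", flush=True)
print()
```

The consumer can display the first chunk before the last one has been generated, which is what makes a chat interface feel responsive.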

RAG - Retrieval-Augmented Generation

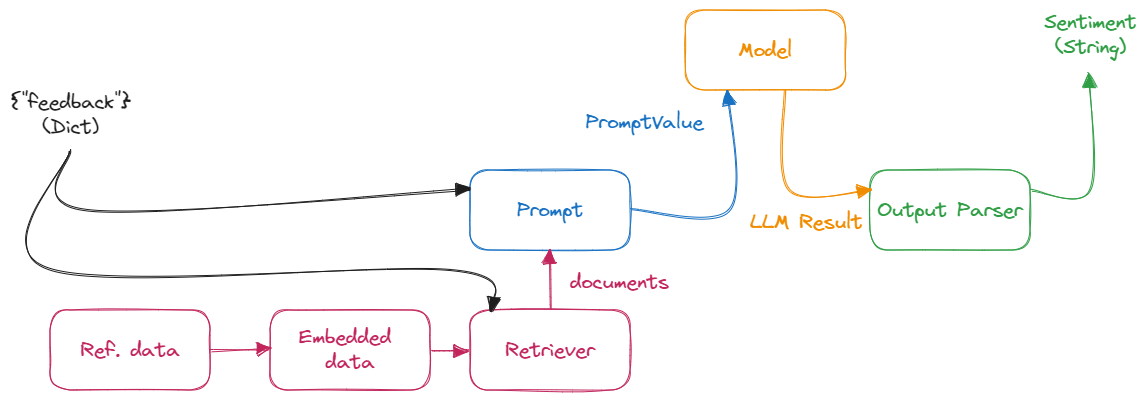

Next, we will explore a chain for Retrieval-Augmented Generation. This chain will include using a retriever to query reference documents:

Chain for Retrieval-Augmented Generation

With this chain, we create the components:

import os
from operator import itemgetter

from langchain_openai import AzureChatOpenAI, AzureOpenAIEmbeddings
from langchain.prompts.prompt import PromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_community.vectorstores import FAISS

# =========== Retriever to reference data
embed_deployment_name = ""  # placeholder: name of your Azure embedding deployment
vectorstore = FAISS.from_texts(
    ["Customer Support experiences: Sarah",
     "Product Service feedback: Michael",  # comma added; without it the last two strings are concatenated
     "Website Usability: Alex"],
    embedding=AzureOpenAIEmbeddings(azure_deployment=embed_deployment_name)
)
retriever = vectorstore.as_retriever()

# =========== (New) Prompt
_template = """Given the feedback, analyze and detect the PIC to resolve based on PIC list:
PIC list:
{document}
Feedback:
<feedback>
{feedback}
</feedback>
Only output the PIC
PIC:"""
prompt = PromptTemplate.from_template(_template)

# =========== Model
model = AzureChatOpenAI(deployment_name=model_deployment_name)

Then, we create the chain and execute it:

# =========== Chains
ref_data_chain = itemgetter("feedback") | retriever

pic_chain = (
    {"document": ref_data_chain,
     "feedback": itemgetter("feedback")}
    | prompt | model | StrOutputParser()
)

print(pic_chain.invoke({"feedback": "I had trouble navigating the website to find information on product returns, which was quite frustrating. However, once I reached out to customer support, it was incredibly helpful and resolved my issue promptly."}))

In the example above, the itemgetter() function extracts a value by key from the dictionary passed in by the preceding step of the chain. We also meet the Retriever, an indispensable component in RAG applications.
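Since itemgetter() comes from Python's standard `operator` module, its behavior can be checked on its own, without any chain. The short sketch below uses a made-up input dictionary; the `user_id` key is hypothetical and only there to show multi-key access.

```python
from operator import itemgetter

# Hypothetical chain input; only "feedback" appears in the article's chains.
chain_input = {"feedback": "The website was hard to navigate.", "user_id": 42}

# itemgetter("feedback") builds a callable equivalent to: lambda d: d["feedback"]
get_feedback = itemgetter("feedback")
feedback_text = get_feedback(chain_input)
print(feedback_text)

# With several keys, itemgetter returns a tuple in the same order.
feedback_and_user = itemgetter("feedback", "user_id")(chain_input)
print(feedback_and_user)
```

A missing key raises `KeyError`, so the input dictionary must contain every key that a chain's itemgetter() calls ask for.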

Branching and Merging

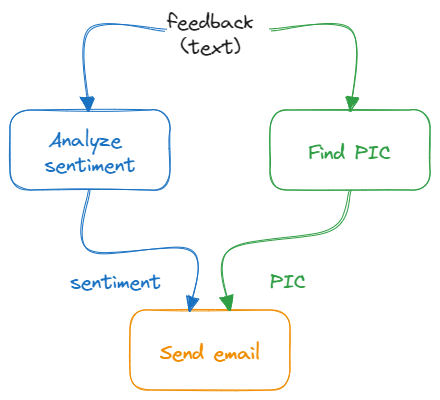

Through the two illustrations above, we have created a chain for sentiment analysis and a chain for finding the PIC - the person in charge of feedback. Next, we will execute them in parallel, saving time and increasing work efficiency:

Chain for branching and merging

We create a new chain as follows:

from langchain_core.runnables import RunnableLambda, RunnableParallel, RunnablePassthrough

# =========== Custom action
def _send_email(sentiment, pic):
    print(f"Send email about {sentiment} feedback for {pic}")

def send_email(_dict):
    _send_email(_dict["sentiment"], _dict["pic"])
    return "OK"

# =========== Chain
combine_chain = (
    {"feedback": RunnablePassthrough()}
    | RunnableParallel(sentiment=sentiment_chain, pic=pic_chain)
    | {"sentiment": itemgetter("sentiment"), "pic": itemgetter("pic")}
    | RunnableLambda(send_email)
)

combine_chain.invoke("I had trouble navigating the website to find information on product returns, which was quite frustrating. However, once I reached out to customer support, it was incredibly helpful and resolved my issue promptly.")

In the illustration above, we see:

- RunnableParallel: Used to execute chains in parallel. Additionally, RunnableParallel can also be used to adjust the output of a link to fit the input of the next link.
- RunnablePassthrough: Passes data to the next link. Data can be added to RunnablePassthrough through the assign() function.
- RunnableLambda: Executes a custom function as a link.
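The branch-and-merge pattern itself can be sketched in plain Python: run every branch on the same input at the same time, then collect the results in a dictionary keyed by branch name. This is a conceptual toy, not LangChain's implementation; `run_parallel`, `fake_sentiment`, and `fake_pic` are hypothetical names, and the two branches use simple keyword checks instead of model calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict


def run_parallel(branches: Dict[str, Callable[[Any], Any]], value: Any) -> Dict[str, Any]:
    """Run every branch on the same input and collect results by branch name."""
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = {name: pool.submit(func, value) for name, func in branches.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical stand-ins for sentiment_chain and pic_chain (no model calls).
def fake_sentiment(data: dict) -> str:
    return "Negative" if "frustrating" in data["feedback"] else "Positive"


def fake_pic(data: dict) -> str:
    return "Alex" if "website" in data["feedback"] else "Sarah"


merged = run_parallel(
    {"sentiment": fake_sentiment, "pic": fake_pic},
    {"feedback": "Navigating the website was frustrating."},
)
print(merged)
```

The merged dictionary has the same shape that the next link, such as the email step, expects as its input.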

Routing Logic

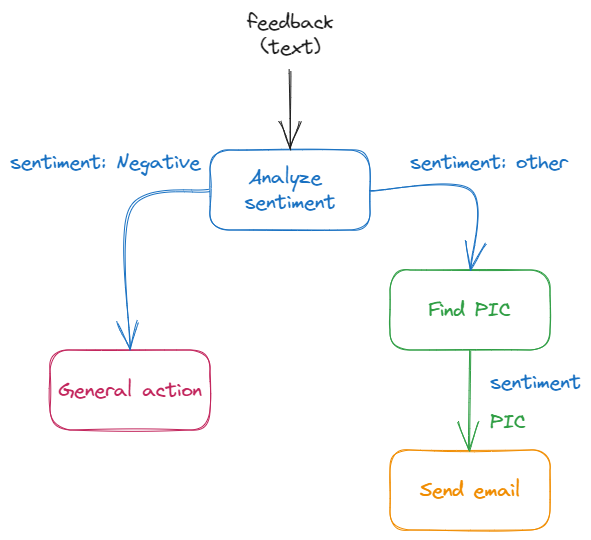

Sometimes, we need to add logic to select the appropriate processing chain. LCEL provides this capability through the use of custom functions or RunnableBranch, making it easy for you to navigate your processing flow.

Chain for Routing Logic

In this example, we modify the chain as follows:

# =========== Custom action for general case
def general_action(_dict):
    print("General action is taken")

# =========== Routing logic
def route(_dict):
    if "negative" in _dict['sentiment'].lower():
        return negative_chain
    else:
        return general_chain

# =========== Define sub chains
analyze_sentiment_chain = {"feedback": RunnablePassthrough()} | sentiment_chain | StrOutputParser()
negative_chain = RunnablePassthrough.assign(pic=pic_chain) | RunnableLambda(send_email)
general_chain = RunnableLambda(general_action)

# =========== Main chain
combine_chain = (
    {
        "sentiment": analyze_sentiment_chain,
        "feedback": RunnablePassthrough()
    }
    | RunnableLambda(route)
)

combine_chain.invoke("I was disappointed with the customer service received; the representative seemed uninterested and my issue remained unresolved. The overall experience was far from what I expected based on previous reviews.")

The example above shows how to use a custom function to add routing logic to the chain. Another way is to use RunnableBranch; however, LangChain recommends custom functions for routing. We also see how RunnablePassthrough.assign() adds data for the next link.
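One practical benefit of routing with a plain function is that the decision logic can be checked without calling a model. The sketch below repeats the same case-insensitive substring check as route(), but returns two hypothetical stand-in handlers (`negative_handler`, `general_handler`) instead of real chains, and feeds it a few made-up sentiment labels.

```python
from typing import Callable, Dict


def negative_handler(data: Dict[str, str]) -> str:
    """Hypothetical stand-in for negative_chain."""
    return "escalate"


def general_handler(data: Dict[str, str]) -> str:
    """Hypothetical stand-in for general_chain."""
    return "log only"


def route(data: Dict[str, str]) -> Callable[[Dict[str, str]], str]:
    # Same check as the routing function above: case-insensitive substring match.
    if "negative" in data["sentiment"].lower():
        return negative_handler
    return general_handler


# Model output can vary in casing or punctuation, so try a few variants.
for label in ["Negative", "negative.", "Neutral", "Positive"]:
    handler = route({"sentiment": label, "feedback": "..."})
    print(label, "->", handler.__name__)
```

The substring match tolerates small variations in the model's answer, such as a trailing period, which an exact string comparison would miss.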

Debug chain

Most components in LangChain implement the Runnable interface. This interface not only provides methods that let links interact with each other easily, such as invoke(), stream(), and batch() and their asynchronous versions ainvoke(), astream(), and abatch(), but also supports methods like astream_log() and astream_events().

We can update the chains in the previous demo from using invoke() to using astream_log() to see the intermediate processing steps:

import asyncio

async def process_log():
    async for chunk in combine_chain.astream_log(
        'I was disappointed with the customer service received; the representative seemed uninterested and my issue remained unresolved. The overall experience was far from what I expected based on previous reviews.',
    ):
        print("-" * 40)
        print(chunk)

asyncio.run(process_log())

Or use astream_events() to see the events that occur during the chain's execution:

async def process_events():
    async for event in combine_chain.astream_events(
        'I was disappointed with the customer service received; the representative seemed uninterested and my issue remained unresolved. The overall experience was far from what I expected based on previous reviews.',
        version="v1",
    ):
        print(event)

asyncio.run(process_events())

Additionally, LCEL also supports the get_graph() function to view the structure of the chain:

combine_chain.get_graph().print_ascii()

Chain Graph

Alternative configuration

A versatile feature of LCEL is configurable_alternatives(). This function allows adding custom options to the chain to increase flexibility depending on the purpose. This is extremely useful for application development and testing.

For the first example, the sentiment chain, we can update the components as follows:

from langchain_core.runnables import ConfigurableField

# =========== Prompt v1
_sentiment_template = """Given the feedback, analyze and detect the sentiment:
Feedback:
<feedback>
{feedback}
</feedback>
Only output sentiment: Negative, Neutral, Positive
Sentiment:"""

# =========== Prompt v2
_sentiment_analysis_prompt = """Analyze the sentiment of the following user feedback, and categorize it as either Negative, Neutral, or Positive. Do not include any additional information or explanation in your response.

User Feedback:
"{feedback}"

Determine the sentiment of the feedback based solely on its content and context. Your response should be concise, limited to one of the three specified sentiment categories.

Sentiment: """

# =========== Configure alternative prompt
sentiment_prompt = PromptTemplate.from_template(_sentiment_template).configurable_alternatives(
    ConfigurableField(id="prompt"),
    default_key="sentiment_v1",
    # This adds a new option, with name `sentiment_v2`
    sentiment_v2=PromptTemplate.from_template(_sentiment_analysis_prompt),
)

from langchain_google_vertexai import ChatVertexAI
from google.oauth2 import service_account
...

# =========== Configure alternative model
sentiment_model = AzureChatOpenAI(deployment_name=model_deployment_name).configurable_alternatives(
    ConfigurableField(id="llm"),
    default_key="azureopenai",
    # This adds a new option, with name `vertex` that is equal to `ChatVertexAI()`
    vertex=ChatVertexAI(project=project_name, credentials=credentials)
)

When executing the chain, we can add customizations with the with_config() function:

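# Rebuild the chain from the configurable prompt and model so the options apply
sentiment_chain = sentiment_prompt | sentiment_model | StrOutputParser()
print(sentiment_chain.with_config(configurable={"prompt": "sentiment_v2", "llm": "vertex"})
      .invoke({"feedback": "I found the service quite impressive, though the ambiance was a bit lacking."}))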

Thus, during operation, we can customize the chain's parameters according to our purposes.
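The underlying idea, picking one of several named alternatives at call time and falling back to a default key, can be sketched in a few lines of plain Python. This is only a conceptual toy, not how configurable_alternatives() is implemented: `PROMPTS`, `DEFAULT_PROMPT_KEY`, and `build_prompt` are hypothetical names, and the prompt texts are shortened.

```python
from typing import Dict, Optional

# Named alternatives, keyed the same way as in the example above.
PROMPTS: Dict[str, str] = {
    "sentiment_v1": "Given the feedback, detect the sentiment: {feedback}",
    "sentiment_v2": "Categorize this feedback as Negative, Neutral, or Positive: {feedback}",
}
DEFAULT_PROMPT_KEY = "sentiment_v1"


def build_prompt(feedback: str, configurable: Optional[Dict[str, str]] = None) -> str:
    """Pick the prompt named in `configurable`, or the default if none is given."""
    key = (configurable or {}).get("prompt", DEFAULT_PROMPT_KEY)
    return PROMPTS[key].format(feedback=feedback)


default_text = build_prompt("Great service")
variant_text = build_prompt("Great service", {"prompt": "sentiment_v2"})
print(default_text)
print(variant_text)
```

Keeping the selection in configuration rather than in code is what makes A/B testing two prompts, or two models, possible without rebuilding the chain.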

Summary of notable keywords

- Runnable: the “core” interface of most components, connecting components into a chain.
- RunnableSequence (operator |) / RunnableParallel: allow you to create chains that execute either sequentially or in parallel.
- RunnablePassthrough: helps pass data between links, and can add new data with the assign() function.
- RunnableLambda: turns a custom function into a link in the chain.
- configurable_alternatives: a feature that allows adding custom options to the processing chain, enhancing flexibility for specific scenarios or A/B testing.

Find more: LCEL's other features